This is a fairly common question in the threads, but looking ahead with all these GPU launches, where do you think the price-to-performance sweet spot is going to lie among the competitors?

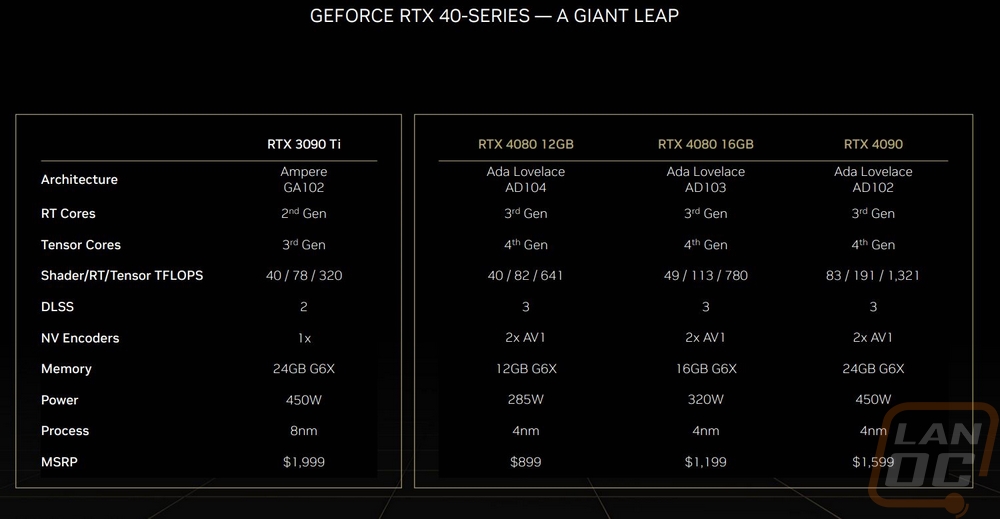

NVIDIA's RTX 4090 looks like the current king with ~16,400 CUDA cores, but will DFL also leverage the massive uplift in Tensor Cores? The current pricing and additional hardware requirements also put the 4000 series out of reach for a lot of us right now.

AMD's RX 7000 series is speculated to come with its own variation of Tensor Cores and performance uplifts. Traditionally, AMD GPUs were priced competitively but fell behind on DFL performance. Perhaps DFL will leverage the newer chips better.

Intel's Arc GPUs are priced well currently and have good ML performance in apps like Topaz Video Enhance with their XMX cores. Plus, 16GB of VRAM at $350 is superb. Has anyone tried DFL with these new chips?